It can be useful to periodically check when each database on a server was last backed up. The easiest way to do this for a single database is to right-click the database in SQL Server Management Studio (SSMS), choose Properties, and look at the top of the Database Properties page (see the screenshot below).

However, when there are several databases to check, this can be quite laborious. SSMS populates this part of the Properties page from the msdb system table backupset (you can verify this by running SQL Server Profiler just before opening the page).

I use a script that joins this table to the backupmediafamily system table (to identify the file name of each backup). For every database, it returns the most recent backup of each type, whether that is a full, transaction log, differential, filegroup or partial backup. Here is the script:

;WITH CTE_Backup AS
(
    SELECT database_name, backup_start_date, type, physical_device_name,
           ROW_NUMBER() OVER (PARTITION BY database_name, BS.type
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM msdb.dbo.backupset BS
    JOIN msdb.dbo.backupmediafamily BMF
        ON BS.media_set_id = BMF.media_set_id
)
SELECT D.name,
       ISNULL(CONVERT(VARCHAR(30), backup_start_date), 'No backups') AS last_backup_time,
       D.recovery_model_desc,
       D.state_desc,
       CASE WHEN type = 'D' THEN 'Full database'
            WHEN type = 'I' THEN 'Differential database'
            WHEN type = 'L' THEN 'Log'
            WHEN type = 'F' THEN 'File or filegroup'
            WHEN type = 'G' THEN 'Differential file'
            WHEN type = 'P' THEN 'Partial'
            WHEN type = 'Q' THEN 'Differential partial'
            ELSE 'Unknown' END AS backup_type,
       physical_device_name
FROM sys.databases D
LEFT JOIN CTE_Backup CTE
    ON D.name = CTE.database_name
   AND RowNum = 1
ORDER BY D.name, type

As an aside, the 'Last Database Backup' shown in SSMS does not seem to include filegroup or partial backups, only full, differential and log backups. I'm not sure why this is.
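The key idea in the script is that ROW_NUMBER() restarts for every (database_name, type) pair, so RowNum = 1 picks the newest backup of each type. The LEFT JOIN from the database list keeps databases that have no history at all. Here is a minimal sketch of that logic, run against a toy in-memory SQLite model rather than SQL Server. The tables `sys_databases`, `backupset` and `backupmediafamily` are hypothetical stand-ins that hold only the columns the query needs, and all rows are invented. SQLite 3.25 or later is assumed, for window-function support.

```python
import sqlite3

# Hypothetical, heavily simplified stand-ins for sys.databases,
# msdb.dbo.backupset and msdb.dbo.backupmediafamily (SQLite, not SQL Server).
SCHEMA = """
CREATE TABLE sys_databases (name TEXT, recovery_model_desc TEXT, state_desc TEXT);
CREATE TABLE backupset (database_name TEXT, backup_start_date TEXT,
                        type TEXT, media_set_id INTEGER);
CREATE TABLE backupmediafamily (media_set_id INTEGER, physical_device_name TEXT);
"""

# Same shape as the first script: number each database's backups per type,
# newest first, and keep only row 1 of every (database, type) group.
LATEST_PER_TYPE = """
WITH CTE_Backup AS (
    SELECT database_name, backup_start_date, type, physical_device_name,
           ROW_NUMBER() OVER (PARTITION BY database_name, BS.type
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM backupset BS
    JOIN backupmediafamily BMF ON BS.media_set_id = BMF.media_set_id
)
SELECT D.name,
       COALESCE(backup_start_date, 'No backups') AS last_backup_time,
       type
FROM sys_databases D
LEFT JOIN CTE_Backup CTE ON D.name = CTE.database_name AND RowNum = 1
ORDER BY D.name, type
"""


def latest_backups(conn):
    """Return (database, last_backup_time, type) rows, one per backup type."""
    return conn.execute(LATEST_PER_TYPE).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sys_databases VALUES (?, 'FULL', 'ONLINE')",
                 [("Sales",), ("Scratch",)])
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?, ?)", [
    ("Sales", "2024-01-01 22:00:00", "D", 1),  # older full backup
    ("Sales", "2024-01-08 22:00:00", "D", 2),  # newest full backup
    ("Sales", "2024-01-09 09:00:00", "L", 3),  # newest log backup
])
conn.executemany("INSERT INTO backupmediafamily VALUES (?, ?)", [
    (1, r"G:\Sales_full_1.bak"),
    (2, r"G:\Sales_full_2.bak"),
    (3, r"G:\Sales_log_3.trn"),
])

for row in latest_backups(conn):
    print(row)
```

Sales comes back once per backup type, and only its newer full backup survives. Scratch has no history, so it appears once with 'No backups' and a NULL type.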

Identifying Databases Which Haven’t Been Backed Up Recently

The above query can return a lot of data if your server has many databases, so I've modified it to list only the databases that have had no backup of any type in the last 7 days (you might want a shorter period, especially for production databases):

;WITH CTE_Backup AS
(
    SELECT database_name, backup_start_date, type, is_readonly, physical_device_name,
           ROW_NUMBER() OVER (PARTITION BY database_name
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM msdb.dbo.backupset BS
    JOIN msdb.dbo.backupmediafamily BMF
        ON BS.media_set_id = BMF.media_set_id
)
SELECT D.name,
       ISNULL(CONVERT(VARCHAR(30), backup_start_date), 'No backups') AS last_backup_time,
       D.recovery_model_desc,
       D.state_desc,
       physical_device_name
FROM sys.databases D
LEFT JOIN CTE_Backup CTE
    ON D.name = CTE.database_name
   AND RowNum = 1
WHERE (backup_start_date IS NULL OR backup_start_date < DATEADD(dd, -7, GETDATE()))
ORDER BY D.name, type

This will produce a list of databases for investigation, though of course there may be a good reason for a database not being backed up. For instance, it's not possible to back up database snapshots (or tempdb), and the secondary database in a log shipping configuration doesn't always need to be backed up.
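Because this version partitions by database_name only, "latest backup" means the latest backup of any type. Here is a minimal sketch of that filter against a toy SQLite model. The hypothetical tables `sys_databases` and `backupset` are simplified stand-ins with invented rows. SQLite's `datetime(now, '-7 days')` plays the role of `DATEADD(dd, -7, GETDATE())`, and `now` is passed in explicitly so the result is repeatable.

```python
import sqlite3

# Hypothetical, simplified stand-ins for sys.databases and msdb.dbo.backupset.
SCHEMA = """
CREATE TABLE sys_databases (name TEXT);
CREATE TABLE backupset (database_name TEXT, backup_start_date TEXT, type TEXT);
"""

# Newest backup of ANY type per database (no type in the PARTITION BY), then
# keep databases whose newest backup is missing or older than the window.
STALE = """
WITH CTE_Backup AS (
    SELECT database_name, backup_start_date, type,
           ROW_NUMBER() OVER (PARTITION BY database_name
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM backupset
)
SELECT D.name, COALESCE(backup_start_date, 'No backups') AS last_backup_time
FROM sys_databases D
LEFT JOIN CTE_Backup CTE ON D.name = CTE.database_name AND RowNum = 1
WHERE backup_start_date IS NULL
   OR backup_start_date < datetime(:now, :window)
ORDER BY D.name
"""


def stale_databases(conn, now, days=7):
    """List databases with no backup newer than `days` days before `now`."""
    return conn.execute(STALE, {"now": now, "window": f"-{days} days"}).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sys_databases VALUES (?)",
                 [("Fresh",), ("Never",), ("Old",)])
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?)", [
    ("Fresh", "2024-01-02 22:00:00", "D"),  # full backup is 8 days old...
    ("Fresh", "2024-01-09 22:00:00", "L"),  # ...but a recent log backup counts
    ("Old", "2024-01-01 22:00:00", "D"),
])

print(stale_databases(conn, "2024-01-10 12:00:00"))
```

Note that Fresh is not flagged even though its full backup is older than the window. A recent log backup is enough to satisfy a query that ignores the backup type.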

Missing Transaction Log Backups

Finally, I've modified the query so that it reports every database that uses the full or bulk-logged recovery model and hasn't had a transaction log backup in the last day:

;WITH CTE_Backup AS
(
    SELECT database_name, backup_start_date, type, is_readonly, physical_device_name,
           ROW_NUMBER() OVER (PARTITION BY database_name, BS.type
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM msdb.dbo.backupset BS
    JOIN msdb.dbo.backupmediafamily BMF
        ON BS.media_set_id = BMF.media_set_id
    WHERE type = 'L'
)
SELECT D.name,
       ISNULL(CONVERT(VARCHAR(30), backup_start_date), 'No log backups') AS last_backup_time,
       D.recovery_model_desc,
       D.state_desc,
       physical_device_name
FROM sys.databases D
LEFT JOIN CTE_Backup CTE
    ON D.name = CTE.database_name
   AND RowNum = 1
WHERE (backup_start_date IS NULL OR backup_start_date < DATEADD(dd, -1, GETDATE()))
  AND D.recovery_model_desc <> 'SIMPLE'
ORDER BY D.name, type
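To see how the two filters interact, here is a minimal sketch against a toy SQLite model. Only log backups (type 'L') enter the CTE, and databases in the SIMPLE recovery model are dropped afterwards because they cannot take log backups. The tables `sys_databases` and `backupset` are hypothetical simplified stand-ins, and the rows are invented. `datetime(now, '-1 days')` stands in for `DATEADD(dd, -1, GETDATE())`.

```python
import sqlite3

# Hypothetical, simplified stand-ins for sys.databases and msdb.dbo.backupset.
SCHEMA = """
CREATE TABLE sys_databases (name TEXT, recovery_model_desc TEXT);
CREATE TABLE backupset (database_name TEXT, backup_start_date TEXT, type TEXT);
"""

# Only log backups are numbered; databases with no recent log backup are
# reported unless they use the SIMPLE recovery model.
MISSING_LOG = """
WITH CTE_Backup AS (
    SELECT database_name, backup_start_date,
           ROW_NUMBER() OVER (PARTITION BY database_name, type
                              ORDER BY backup_start_date DESC) AS RowNum
    FROM backupset
    WHERE type = 'L'
)
SELECT D.name, D.recovery_model_desc,
       COALESCE(backup_start_date, 'No log backups') AS last_backup_time
FROM sys_databases D
LEFT JOIN CTE_Backup CTE ON D.name = CTE.database_name AND RowNum = 1
WHERE (backup_start_date IS NULL OR backup_start_date < datetime(:now, '-1 days'))
  AND D.recovery_model_desc <> 'SIMPLE'
ORDER BY D.name
"""


def missing_log_backups(conn, now):
    """Return (database, recovery model, last log backup) rows needing attention."""
    return conn.execute(MISSING_LOG, {"now": now}).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sys_databases VALUES (?, ?)", [
    ("Billing", "FULL"), ("Orders", "FULL"),
    ("Staging", "SIMPLE"), ("Warehouse", "BULK_LOGGED"),
])
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?)", [
    ("Billing", "2024-01-10 11:00:00", "L"),    # recent log backup: fine
    ("Billing", "2024-01-09 22:00:00", "D"),
    ("Orders", "2024-01-08 23:00:00", "L"),     # log backup too old
    ("Staging", "2024-01-09 22:00:00", "D"),    # SIMPLE: never reported
    ("Warehouse", "2024-01-10 01:00:00", "D"),  # full backups only, no log
])

print(missing_log_backups(conn, "2024-01-10 12:00:00"))
```

Warehouse is reported even though it has a recent full backup, because only log backups are considered.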

I hope the above queries help you identify databases that don't have a recent backup. You really don't want to discover this only when a backup is actually needed, i.e. after a disk failure or data corruption. Of course, you should also check that your backups are valid, ideally by periodically restoring from them, or at the very least by checking them with RESTORE VERIFYONLY (though there is really no substitute for an actual restore).

Script to retrieve SQL Server database backup history and no backups

Problem

There is a multitude of data to be mined from the Microsoft SQL Server system views. SQL Server Management Studio (SSMS) and third-party management tools use this data to present information to their users. Be it database backup information, file statistics, indexing information, or one of the thousands of other metrics that the instance maintains, this data is also readily available for direct querying and for use in your own "home-grown" monitoring solutions. This tip focuses on the first of those metrics, database backup information: where it resides, how it is structured, and what data is available to be mined.

Solution

The msdb system database is the primary repository for SQL Server Agent, backup, Service Broker, Database Mail, log shipping, restore, and maintenance plan metadata. This tip focuses on the handful of msdb system tables associated with database backups:

dbo.backupset: contains one row for each backup set, with details such as the database name, backup type, start and finish dates, and backup size

dbo.backupmediafamily: contains one row for each media family, including the logical and physical device name of each backup file

dbo.backupfile: contains one row for each data or log file of a database included in a backup set

Based upon these tables, we can create a variety of queries that give detailed insight into the status of backups for the databases in any given SQL Server instance.

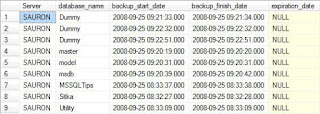

Database Backups for all databases For Previous Week

---------------------------------------------------------------------------------

--Database Backups for all databases For Previous Week

---------------------------------------------------------------------------------

SELECT
    CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
    bs.database_name,
    bs.backup_start_date,
    bs.backup_finish_date,
    bs.expiration_date,
    CASE bs.type
        WHEN 'D' THEN 'Database'
        WHEN 'I' THEN 'Differential'
        WHEN 'L' THEN 'Log'
    END AS backup_type,
    bs.backup_size,
    bmf.logical_device_name,
    bmf.physical_device_name,
    bs.name AS backupset_name,
    bs.description
FROM msdb.dbo.backupmediafamily AS bmf
INNER JOIN msdb.dbo.backupset AS bs
    ON bmf.media_set_id = bs.media_set_id
WHERE bs.backup_start_date >= DATEADD(dd, -7, GETDATE())
ORDER BY
    bs.database_name,
    bs.backup_finish_date

Note: for readability the output was split into two screenshots.

Most Recent Database Backup for Each Database

-------------------------------------------------------------------------------------------

--Most Recent Database Backup for Each Database

-------------------------------------------------------------------------------------------

SELECT
    CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
    bs.database_name,
    MAX(bs.backup_finish_date) AS last_db_backup_date
FROM msdb.dbo.backupmediafamily AS bmf
INNER JOIN msdb.dbo.backupset AS bs
    ON bmf.media_set_id = bs.media_set_id
WHERE bs.type = 'D'
GROUP BY
    bs.database_name
ORDER BY
    bs.database_name

Most Recent Database Backup for Each Database - Detailed

You can join the two result sets together with the following query to return more detailed information about the last full backup of each database. The LEFT JOIN matches the grouped data to the detail rows from the previous query, so you don't have to add the detail columns you don't want to group on to the GROUP BY.

-------------------------------------------------------------------------------------------

--Most Recent Database Backup for Each Database - Detailed

-------------------------------------------------------------------------------------------

SELECT
    A.[Server],
    A.database_name,
    A.last_db_backup_date,
    B.backup_start_date,
    B.expiration_date,
    B.backup_size,
    B.logical_device_name,
    B.physical_device_name,
    B.backupset_name,
    B.description
FROM
(
    SELECT
        CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
        bs.database_name,
        MAX(bs.backup_finish_date) AS last_db_backup_date
    FROM msdb.dbo.backupmediafamily AS bmf
    INNER JOIN msdb.dbo.backupset AS bs
        ON bmf.media_set_id = bs.media_set_id
    WHERE bs.type = 'D'
    GROUP BY bs.database_name
) AS A
LEFT JOIN
(
    SELECT
        CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
        bs.database_name,
        bs.backup_start_date,
        bs.backup_finish_date,
        bs.expiration_date,
        bs.backup_size,
        bmf.logical_device_name,
        bmf.physical_device_name,
        bs.name AS backupset_name,
        bs.description
    FROM msdb.dbo.backupmediafamily AS bmf
    INNER JOIN msdb.dbo.backupset AS bs
        ON bmf.media_set_id = bs.media_set_id
    WHERE bs.type = 'D'
) AS B
    ON A.[Server] = B.[Server]
   AND A.database_name = B.database_name
   AND A.last_db_backup_date = B.backup_finish_date
ORDER BY
    A.database_name

Note: for readability the output was split into two screenshots.
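The "group, then join back on the MAX" pattern can be tried out on a toy SQLite model. This minimal sketch keeps only the columns needed for the join and drops the server column, since the model has a single instance. The tables are hypothetical stand-ins and the data is invented. One behavior it makes visible: backupmediafamily holds one row per media family, so a full backup striped across two files comes back as two detail rows for the same database.

```python
import sqlite3

# Hypothetical, simplified stand-ins for msdb.dbo.backupset and
# msdb.dbo.backupmediafamily; the server column is omitted.
SCHEMA = """
CREATE TABLE backupset (database_name TEXT, backup_finish_date TEXT,
                        type TEXT, media_set_id INTEGER);
CREATE TABLE backupmediafamily (media_set_id INTEGER, physical_device_name TEXT);
"""

# A = newest full-backup finish time per database (grouped);
# B = every full-backup detail row; the LEFT JOIN matches them back up.
LATEST_FULL_DETAIL = """
SELECT A.database_name, A.last_db_backup_date, B.physical_device_name
FROM (
    SELECT bs.database_name, MAX(bs.backup_finish_date) AS last_db_backup_date
    FROM backupmediafamily bmf
    JOIN backupset bs ON bmf.media_set_id = bs.media_set_id
    WHERE bs.type = 'D'
    GROUP BY bs.database_name
) AS A
LEFT JOIN (
    SELECT bs.database_name, bs.backup_finish_date, bmf.physical_device_name
    FROM backupmediafamily bmf
    JOIN backupset bs ON bmf.media_set_id = bs.media_set_id
    WHERE bs.type = 'D'
) AS B
  ON A.database_name = B.database_name
 AND A.last_db_backup_date = B.backup_finish_date
ORDER BY A.database_name, B.physical_device_name
"""


def latest_full_detail(conn):
    """Return (database, last full backup finish, backup file) rows."""
    return conn.execute(LATEST_FULL_DETAIL).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?, ?)", [
    ("HR", "2024-01-05 23:10:00", "D", 1),
    ("HR", "2024-01-06 23:10:00", "D", 2),     # newest full, striped over two files
    ("HR", "2024-01-07 01:00:00", "L", 3),     # log backup: ignored (type 'D' only)
    ("Sales", "2024-01-06 23:40:00", "D", 4),
])
conn.executemany("INSERT INTO backupmediafamily VALUES (?, ?)", [
    (1, r"G:\HR_1.bak"),
    (2, r"G:\HR_2a.bak"),
    (2, r"H:\HR_2b.bak"),
    (3, r"G:\HR_3.trn"),
    (4, r"G:\Sales_4.bak"),
])

for row in latest_full_detail(conn):
    print(row)
```

HR comes back twice because its newest full backup was written to two files. Sales comes back once.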

Databases Missing a Data (aka Full) Back-Up Within Past 24 Hours

At this point we've seen how to look at the history for databases that have been backed up. While this information is important, there is an aspect of backup metadata that is slightly more important: which of the databases you administer are not being backed up. The following query provides you with that information (with some caveats).

-------------------------------------------------------------------------------------------

--Databases Missing a Data (aka Full) Back-Up Within Past 24 Hours

-------------------------------------------------------------------------------------------

--Databases with data backup over 24 hours old
SELECT
    CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
    msdb.dbo.backupset.database_name,
    MAX(msdb.dbo.backupset.backup_finish_date) AS last_db_backup_date,
    DATEDIFF(hh, MAX(msdb.dbo.backupset.backup_finish_date), GETDATE()) AS [Backup Age (Hours)]
FROM msdb.dbo.backupset
WHERE msdb.dbo.backupset.type = 'D'
GROUP BY msdb.dbo.backupset.database_name
HAVING (MAX(msdb.dbo.backupset.backup_finish_date) < DATEADD(hh, -24, GETDATE()))
UNION
--Databases without any backup history
SELECT
    CONVERT(CHAR(100), SERVERPROPERTY('Servername')) AS Server,
    master.dbo.sysdatabases.NAME AS database_name,
    NULL AS [Last Data Backup Date],
    9999 AS [Backup Age (Hours)]
FROM master.dbo.sysdatabases
LEFT JOIN msdb.dbo.backupset
    ON master.dbo.sysdatabases.name = msdb.dbo.backupset.database_name
WHERE msdb.dbo.backupset.database_name IS NULL
  AND master.dbo.sysdatabases.name <> 'tempdb'
ORDER BY
    msdb.dbo.backupset.database_name

Now let me explain this query and its caveats. The first part returns every database whose last full backup is more than 24 hours older than the current system date. The UNION then adds the second part, which returns every database that has no backup history at all. I've excluded tempdb from the result set because that system database cannot be backed up; it is recreated each time the SQL Server service restarts. That is caveat #1. Caveat #2 is the arbitrary aging value of 9999 hours that I assign to databases without any backup history. I chose that value because in my environment I want to place a higher emphasis on databases that have never been backed up.

Using this final query, I produce a daily SQL Server Reporting Services report for the DBA team that highlights any missed backups. That, however, is for another tip.
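Here is a minimal sketch of the two UNION branches against a toy SQLite model, which shows how the 9999 sentinel and the tempdb exclusion behave. The tables `sysdatabases` and `backupset` are hypothetical simplified stand-ins with invented rows. SQLite has no DATEDIFF, so the age is computed as whole elapsed hours from Unix-epoch seconds. With the on-the-hour timestamps used here, that gives the same number as `DATEDIFF(hh, ...)`. The sketch also surfaces a consequence of the query's logic: a database whose history contains only log or differential backups appears in neither branch.

```python
import sqlite3

# Hypothetical, simplified stand-ins for master.dbo.sysdatabases and msdb.dbo.backupset.
SCHEMA = """
CREATE TABLE sysdatabases (name TEXT);
CREATE TABLE backupset (database_name TEXT, backup_finish_date TEXT, type TEXT);
"""

# Branch 1: full backups older than 24 hours; branch 2: no history at all
# (tempdb excluded), tagged with the arbitrary 9999-hour age.
MISSING_FULL = """
SELECT bs.database_name AS database_name,
       MAX(bs.backup_finish_date) AS last_db_backup_date,
       (CAST(strftime('%s', :now) AS INTEGER)
        - CAST(strftime('%s', MAX(bs.backup_finish_date)) AS INTEGER)) / 3600
           AS backup_age_hours
FROM backupset bs
WHERE bs.type = 'D'
GROUP BY bs.database_name
HAVING MAX(bs.backup_finish_date) < datetime(:now, '-24 hours')
UNION
SELECT d.name, NULL, 9999
FROM sysdatabases d
LEFT JOIN backupset bs ON d.name = bs.database_name
WHERE bs.database_name IS NULL AND d.name <> 'tempdb'
ORDER BY database_name
"""


def missing_full_backups(conn, now):
    """Return (database, last full backup, age in hours) rows."""
    return conn.execute(MISSING_FULL, {"now": now}).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sysdatabases VALUES (?)",
                 [("HR",), ("LogOnly",), ("NewDb",), ("Sales",), ("tempdb",)])
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?)", [
    ("HR", "2024-01-08 22:00:00", "D"),       # 38 hours old: reported
    ("Sales", "2024-01-10 02:00:00", "D"),    # recent: not reported
    ("LogOnly", "2024-01-10 10:00:00", "L"),  # log history only: in neither branch
])

print(missing_full_backups(conn, "2024-01-10 12:00:00"))
```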

SQL SERVER – Finding Last Backup Time for All Databases

Here is the quick script I use to find the last backup time for all the databases in my server instance.

SELECT sdb.Name AS DatabaseName,
       COALESCE(CONVERT(VARCHAR(12), MAX(bus.backup_finish_date), 101), '-') AS LastBackUpTime
FROM sys.sysdatabases sdb
LEFT OUTER JOIN msdb.dbo.backupset bus ON bus.database_name = sdb.name
GROUP BY sdb.Name
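This script has three moving parts. The LEFT OUTER JOIN keeps databases with no history. MAX() picks the newest backup of any type, not just full backups. COALESCE turns the resulting NULL into '-'. Here is a minimal sketch of that behavior against a toy SQLite model. The tables are hypothetical stand-ins with invented rows, `strftime('%m/%d/%Y', ...)` stands in for `CONVERT(VARCHAR(12), ..., 101)`, and the ORDER BY is added only to make the output order predictable.

```python
import sqlite3

# Hypothetical, simplified stand-ins for sys.sysdatabases and msdb.dbo.backupset.
SCHEMA = """
CREATE TABLE sysdatabases (name TEXT);
CREATE TABLE backupset (database_name TEXT, backup_finish_date TEXT, type TEXT);
"""

# MAX() considers every backup type; a database with no rows gets NULL,
# which COALESCE replaces with '-'.
LAST_BACKUP = """
SELECT sdb.name AS DatabaseName,
       COALESCE(strftime('%m/%d/%Y', MAX(bus.backup_finish_date)), '-') AS LastBackUpTime
FROM sysdatabases sdb
LEFT OUTER JOIN backupset bus ON bus.database_name = sdb.name
GROUP BY sdb.name
ORDER BY sdb.name
"""


def last_backup_dates(conn):
    """Return (database, mm/dd/yyyy of newest backup of any type or '-')."""
    return conn.execute(LAST_BACKUP).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO sysdatabases VALUES (?)", [("NewDb",), ("Sales",)])
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?)", [
    ("Sales", "2024-01-08 22:05:00", "D"),
    ("Sales", "2024-01-09 09:01:00", "L"),  # a log backup also counts as "last backup"
])

print(last_backup_dates(conn))
```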

Identify when a SQL Server database was restored, the source and backup date

Problem

After restoring a database, your users will typically run some queries to verify the data is as expected. However, there are times when your users may question whether the restore was done using the correct backup file. In this tip I will show you how to identify the file(s) used for the restore, when the backup actually occurred and when the database was restored.

Solution

The restore information is readily available inside the msdb database, making the solution as easy as a few lines of T-SQL.

When I ask people how they verify their database restores, I often get a response that includes something similar to the following code:

RESTORE VERIFYONLY FROM DISK = 'G:\dbname.bak'

The above command simply returns this message when successful: "The backup set on file 1 is valid." Is that really useful to an end user who is complaining that the data is not correct? Chances are their complaint is not about whether the backup set was valid, but about your choice of backup file or the timing of the backup itself.

If the backup was done at the wrong time, or if you restored from the wrong backup file, then the end user may be seeing exactly that problem while reviewing the data. So, how do you provide some proof that you did the restore from the correct backup file? The following script can give you this information.

SELECT [rs].[destination_database_name],
       [rs].[restore_date],
       [bs].[backup_start_date],
       [bs].[backup_finish_date],
       [bs].[database_name] AS [source_database_name],
       [bmf].[physical_device_name] AS [backup_file_used_for_restore]
FROM msdb..restorehistory rs
INNER JOIN msdb..backupset bs
    ON [rs].[backup_set_id] = [bs].[backup_set_id]
INNER JOIN msdb..backupmediafamily bmf
    ON [bs].[media_set_id] = [bmf].[media_set_id]
ORDER BY [rs].[restore_date] DESC

The script will return the following result set:

| Column Name | Description |
|---|---|
| destination_database_name | The name of the database that has been restored. |
| restore_date | The time at which the restore command was started. |
| backup_start_date | The time at which the backup command was started. |
| backup_finish_date | The time at which the backup command completed. |
| source_database_name | The name of the database that was backed up (the source of the restore). |
| backup_file_used_for_restore | The file(s) that the restore used in the RESTORE command. |

Here is a screenshot of a sample result set returned by the script.
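To see how restorehistory links back to the backup that was used, here is a minimal sketch of the same three-table join against a toy SQLite model. The tables `restorehistory`, `backupset` and `backupmediafamily` are hypothetical simplified stand-ins, and the scenario is invented: one production database is restored twice under different names. The source_database_name column shows the original database, while the file and backup dates show which backup each copy came from.

```python
import sqlite3

# Hypothetical, simplified stand-ins for msdb.dbo.restorehistory,
# msdb.dbo.backupset and msdb.dbo.backupmediafamily.
SCHEMA = """
CREATE TABLE restorehistory (destination_database_name TEXT, restore_date TEXT,
                             backup_set_id INTEGER);
CREATE TABLE backupset (backup_set_id INTEGER, database_name TEXT,
                        backup_start_date TEXT, backup_finish_date TEXT,
                        media_set_id INTEGER);
CREATE TABLE backupmediafamily (media_set_id INTEGER, physical_device_name TEXT);
"""

# restore -> backup set (via backup_set_id) -> backup file(s) (via media_set_id)
RESTORES = """
SELECT rs.destination_database_name,
       rs.restore_date,
       bs.backup_start_date,
       bs.backup_finish_date,
       bs.database_name AS source_database_name,
       bmf.physical_device_name AS backup_file_used_for_restore
FROM restorehistory rs
INNER JOIN backupset bs ON rs.backup_set_id = bs.backup_set_id
INNER JOIN backupmediafamily bmf ON bs.media_set_id = bmf.media_set_id
ORDER BY rs.restore_date DESC
"""


def restore_report(conn):
    """Return one row per restored file, newest restore first."""
    return conn.execute(RESTORES).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO backupset VALUES (?, ?, ?, ?, ?)", [
    (1, "Sales", "2024-01-08 22:00:00", "2024-01-08 22:05:00", 10),
    (2, "Sales", "2024-01-09 22:00:00", "2024-01-09 22:06:00", 11),
])
conn.executemany("INSERT INTO backupmediafamily VALUES (?, ?)", [
    (10, r"G:\Sales_0108.bak"),
    (11, r"G:\Sales_0109.bak"),
])
conn.executemany("INSERT INTO restorehistory VALUES (?, ?, ?)", [
    ("Sales_Test", "2024-01-10 08:00:00", 1),  # restored from the older backup
    ("Sales_UAT", "2024-01-10 09:30:00", 2),
])

for row in restore_report(conn):
    print(row)
```

If a user of Sales_Test reports that yesterday's data is missing, the second row shows it was restored from the 2024-01-08 backup rather than the newer one.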